This article focuses on backpropagation in a CNN and the math behind it.

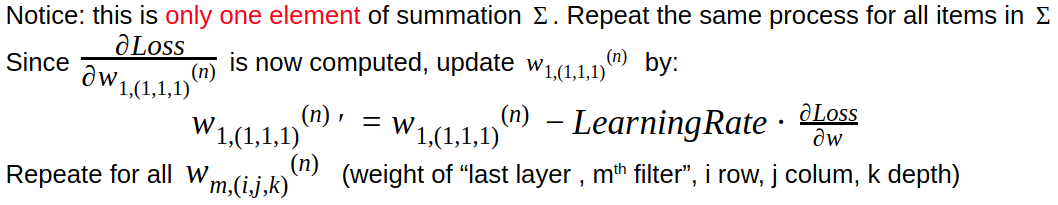

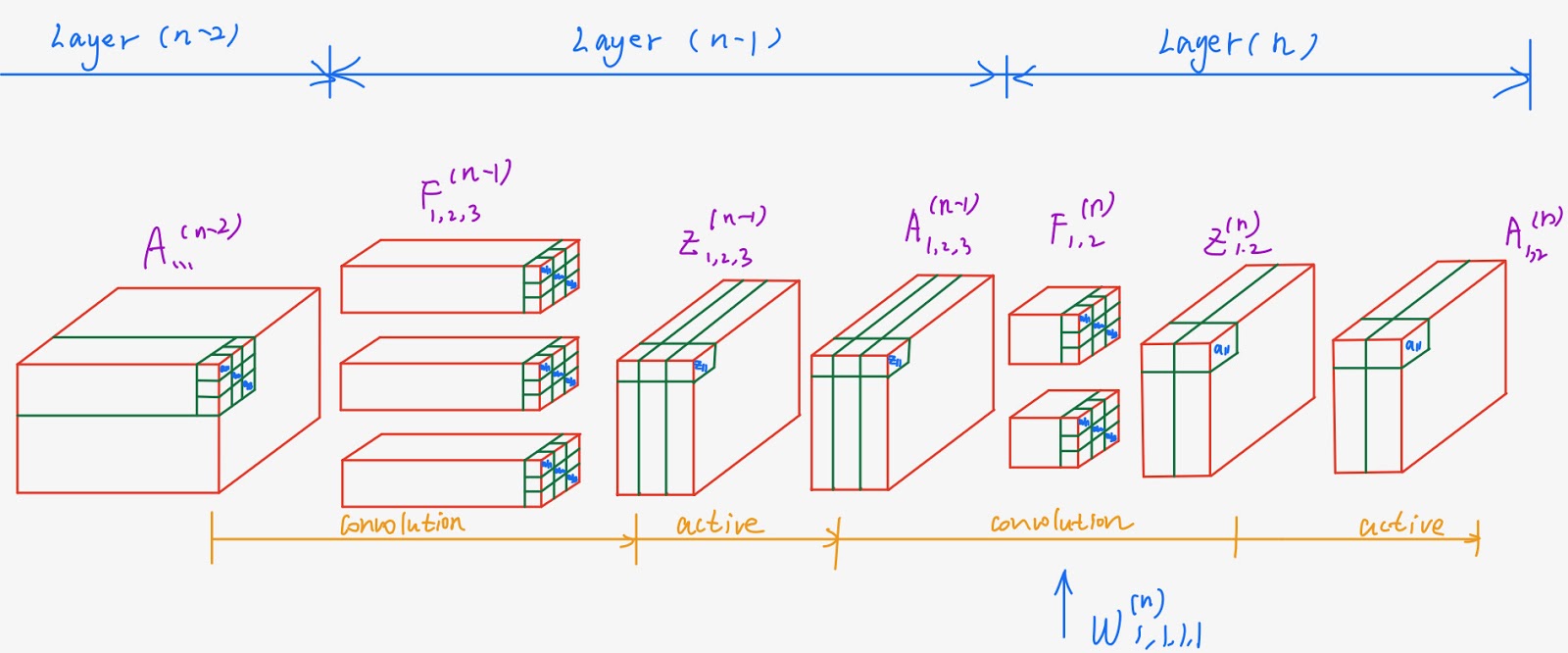

The following picture shows the last 3 layers of a CNN that has n layers in total. We will use it to demonstrate backpropagation and the weight-update process.

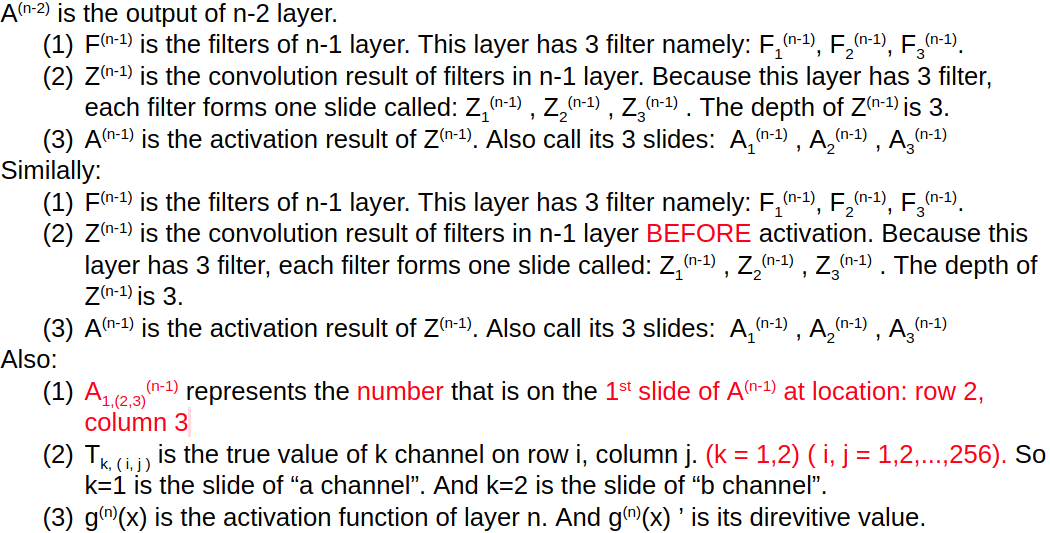

Important notation:

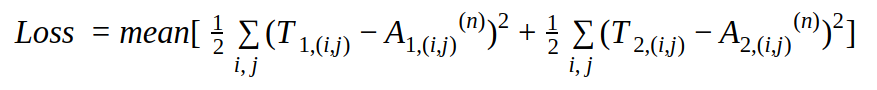

The loss function is then:

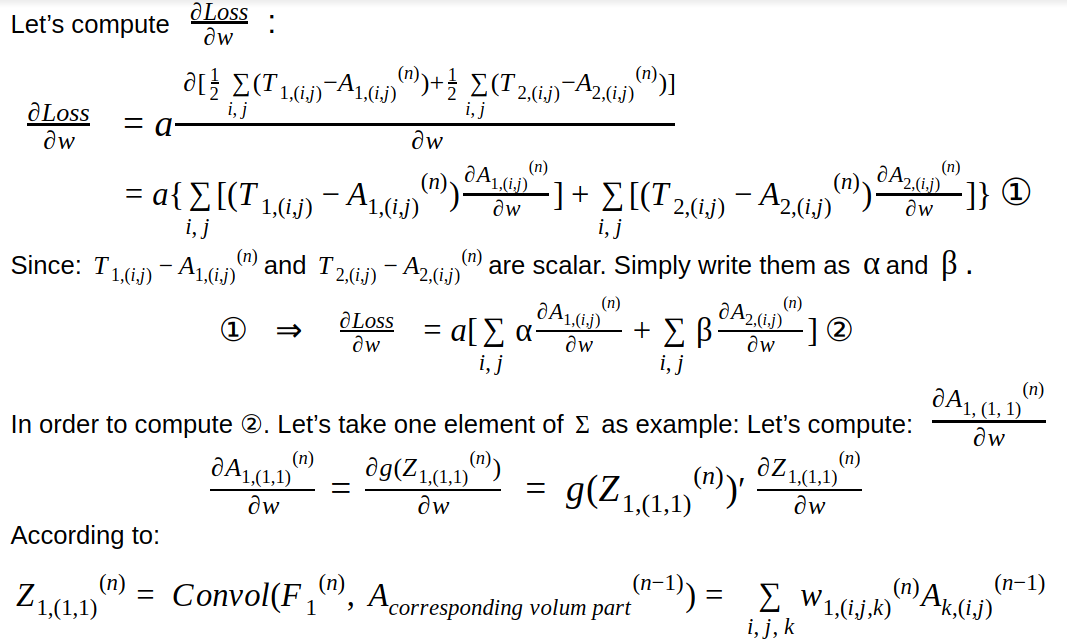

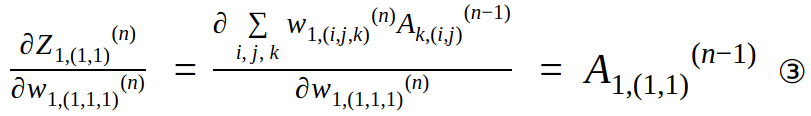

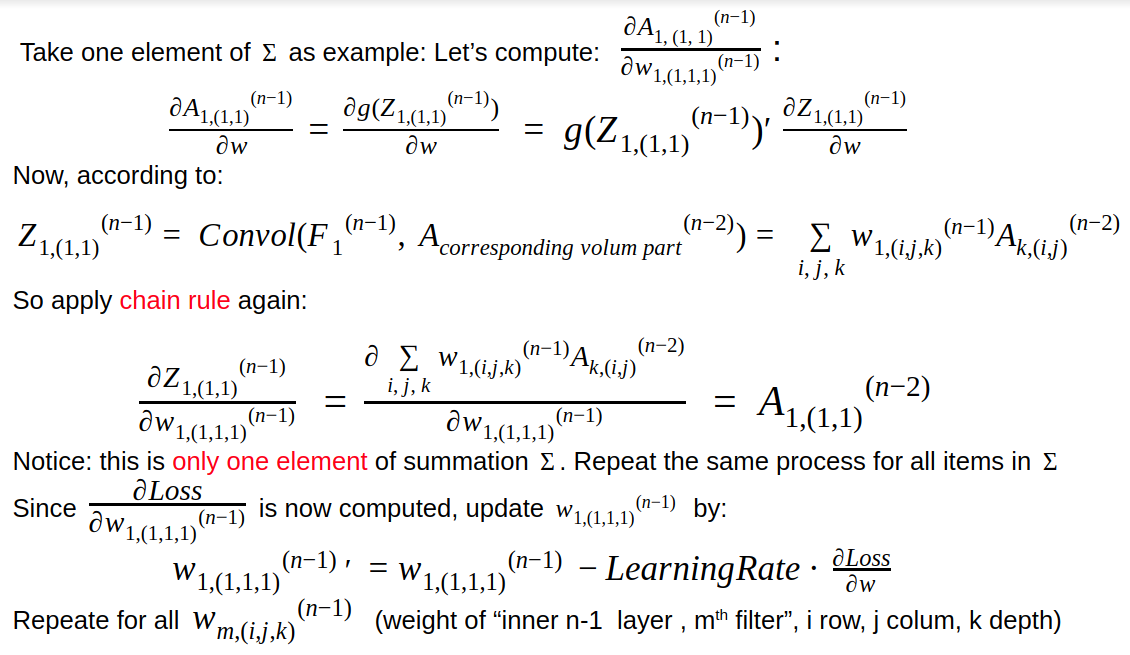

Update the weights of the last layer (layer n)

First, let’s update the weights of the last layer, that is, all parameters of the 2 filters of layer n.

So we apply the chain rule again:

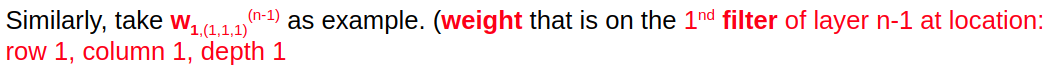

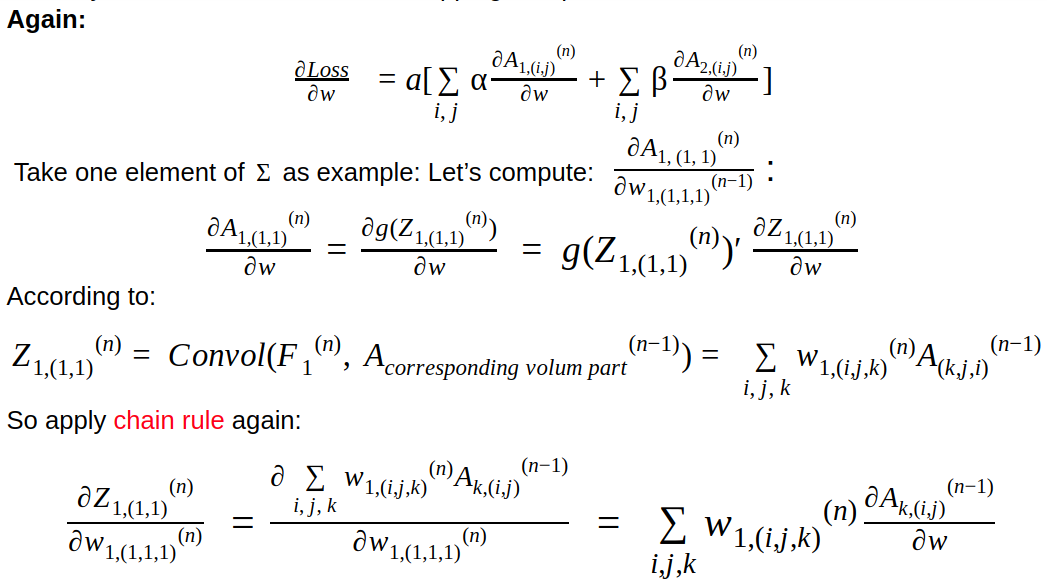
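As a rough illustration of the chain-rule step for the last layer, here is a minimal sketch in plain Python. It is not the exact network from the picture: it assumes a single-channel input, one filter instead of 2, stride 1, no padding, no bias or activation, and a half sum-of-squares loss. The names `conv2d_valid`, `filter_gradient`, and `sgd_step` are made up for this example.

```python
def conv2d_valid(x, w):
    """Valid cross-correlation of 2D list x with 2D kernel w (stride 1, no padding)."""
    kh, kw = len(w), len(w[0])
    oh, ow = len(x) - kh + 1, len(x[0]) - kw + 1
    return [[sum(x[i + a][j + b] * w[a][b] for a in range(kh) for b in range(kw))
             for j in range(ow)] for i in range(oh)]


def loss(y, t):
    """Assumed loss: half the sum of squared errors between output y and target t."""
    return 0.5 * sum((yv - tv) ** 2 for yr, tr in zip(y, t) for yv, tv in zip(yr, tr))


def filter_gradient(x, w, t):
    """dL/dw by the chain rule: sum over output positions of dL/dy * dy/dw."""
    y = conv2d_valid(x, w)
    # dL/dy for the assumed loss is simply (y - t).
    dy = [[yv - tv for yv, tv in zip(yr, tr)] for yr, tr in zip(y, t)]
    # dy[i][j]/dw[a][b] = x[i+a][j+b], so the sum over (i, j) is itself a valid
    # cross-correlation of the layer input with dL/dy.
    return conv2d_valid(x, dy)


def sgd_step(w, grad, lr):
    """Plain gradient-descent update: w <- w - lr * dL/dw."""
    return [[wv - lr * gv for wv, gv in zip(wr, gr)] for wr, gr in zip(w, grad)]


# Toy data (arbitrary values, chosen only for illustration).
x = [[1.0, 2.0, 0.0], [0.0, 1.0, 3.0], [2.0, 1.0, 1.0]]  # input to layer n
w = [[0.5, -0.5], [0.25, 1.0]]                           # one 2x2 filter
t = [[1.0, 0.0], [0.0, 1.0]]                             # target output

grad = filter_gradient(x, w, t)
w_new = sgd_step(w, grad, lr=0.01)
```

With 2 filters, the same computation would be repeated per filter, each using the gradient of the loss with respect to its own output map.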

Update the weights of an inner layer (e.g. layer n-1)

Second, let’s update the weights of the inner layers (e.g. layer n-1), that is, all parameters of the 3 filters of layer n-1.

Instead of stopping at equation ③, we have to continue:
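A minimal sketch of what continuing past the last layer can look like in plain Python. It is an illustration under assumptions, not the derivation from the figures: two stacked single-channel layers with one filter each, stride 1, no padding, identity activation, and a half sum-of-squares loss. The helper names (`input_gradient`, `total_loss`) are hypothetical.

```python
def conv2d_valid(x, w):
    """Valid cross-correlation of 2D list x with 2D kernel w (stride 1, no padding)."""
    kh, kw = len(w), len(w[0])
    oh, ow = len(x) - kh + 1, len(x[0]) - kw + 1
    return [[sum(x[i + a][j + b] * w[a][b] for a in range(kh) for b in range(kw))
             for j in range(ow)] for i in range(oh)]


def input_gradient(dy, w):
    """dL/dx for y = conv2d_valid(x, w), given dL/dy.

    Equivalent to a 'full' convolution of dL/dy with the kernel.
    """
    kh, kw = len(w), len(w[0])
    oh, ow = len(dy), len(dy[0])
    dx = [[0.0] * (ow + kw - 1) for _ in range(oh + kh - 1)]
    for i in range(oh):
        for j in range(ow):
            for a in range(kh):
                for b in range(kw):
                    # y[i][j] used x[i+a][j+b] with weight w[a][b].
                    dx[i + a][j + b] += dy[i][j] * w[a][b]
    return dx


def total_loss(x, w1, w2, t):
    """Assumed loss: half the sum of squared errors after both layers."""
    y = conv2d_valid(conv2d_valid(x, w1), w2)
    return 0.5 * sum((yv - tv) ** 2 for yr, tr in zip(y, t) for yv, tv in zip(yr, tr))


# Toy data (arbitrary values, chosen only for illustration).
x = [[1.0, 0.0, 2.0, 1.0],
     [0.0, 1.0, 1.0, 0.0],
     [2.0, 1.0, 0.0, 1.0],
     [1.0, 0.0, 1.0, 2.0]]
w1 = [[0.5, -0.25], [0.25, 0.5]]   # filter of layer n-1
w2 = [[1.0, 0.5], [-0.5, 0.25]]    # filter of layer n
t = [[1.0, 0.0], [0.0, 1.0]]       # target output

# Forward pass.
h = conv2d_valid(x, w1)            # output of layer n-1, input of layer n
y = conv2d_valid(h, w2)            # output of layer n

# Backward pass: the chain rule carries dL/dy back through layer n first.
dy = [[yv - tv for yv, tv in zip(yr, tr)] for yr, tr in zip(y, t)]  # dL/dy
dh = input_gradient(dy, w2)        # dL/dh
dw1 = conv2d_valid(x, dh)          # dL/dw1, same shape as w1
```

With a non-identity activation between the layers, `dh` would also be multiplied elementwise by the activation's derivative before computing `dw1`.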

Convergence

The network has converged when the value of the loss function stays below a predetermined threshold.
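One possible way to turn this stopping rule into code is sketched below. The function name `has_converged` and the `patience` parameter (how many consecutive recent losses must be below the threshold) are assumptions made for this example, not something defined in the article.

```python
def has_converged(loss_history, threshold, patience=5):
    """Return True if the last `patience` recorded losses are all below `threshold`."""
    if len(loss_history) < patience:
        return False  # not enough history to call the loss stable
    return all(value < threshold for value in loss_history[-patience:])


# Usage: record the loss after each training step and stop once it stays low.
losses = [1.0, 0.5, 0.05, 0.04, 0.03]
done = has_converged(losses, threshold=0.1, patience=3)
```

Requiring several consecutive values guards against stopping on a single lucky batch.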

Disclaimer:

This is only a study note. Correctness is not guaranteed.

Original work. Corrections and sharing are welcome.
